Tutorial: Build a Smart Call Center Analysis Gen AI App with MLRun, Gradio and SQLAlchemy

Developing a gen AI app requires multiple engineering resources, but with MLRun the process can be simplified and automated. In this blog post, we walk through building a smart call center application. The tutorial includes two pipelines: one that generates call data and one that analyzes the calls. For readers interested in the business side, we begin with a short overview of how AI is impacting industries.

You can follow along with the accompanying notebook and clone the Git repository. Don’t forget to star us on GitHub when you do! You can also watch the tutorial video.

AI is changing our economy and the way we work. According to McKinsey, AI’s most substantial impact is in three main areas:

Redistributing profit pools: AIaaS (AI-as-a-Service) is added to the value chain, resulting in new solutions and in entire value chains being replaced.

When building a gen AI app and operationalizing LLMs, it’s important to perform the following actions:

Now let’s dive into the hands-on tutorial.

The following tutorial shows how to build an LLM-based call center analysis application. We’ll show how you can use gen AI to analyze calls between customers and agents, so that insights can be extracted from your audio recordings.

This will be done with MLRun in a single workflow. MLRun will:

As a reminder, you can:

This comprises six steps, some of which are based on MLRun’s Function Hub:

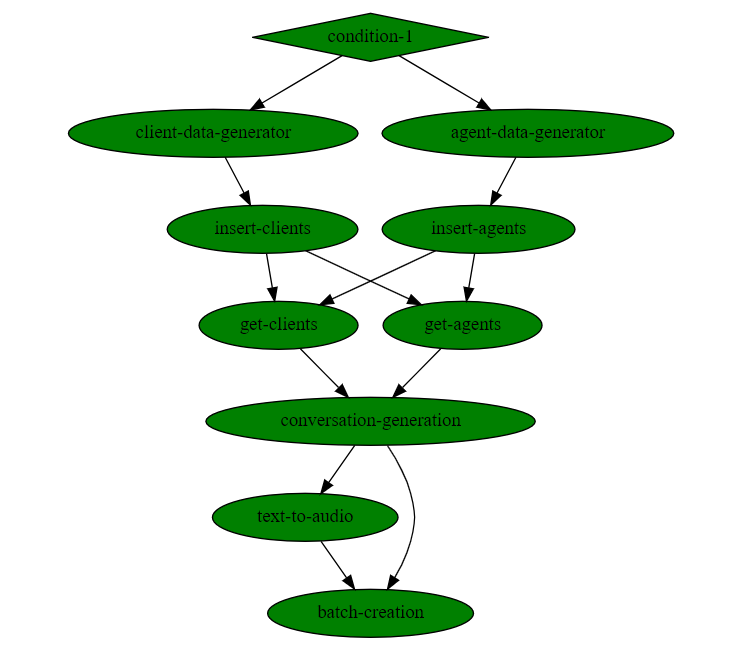

The resulting workflow will look like this:

As you can see, no code is required. More details on each step, and on when to use it, are available in the documentation.

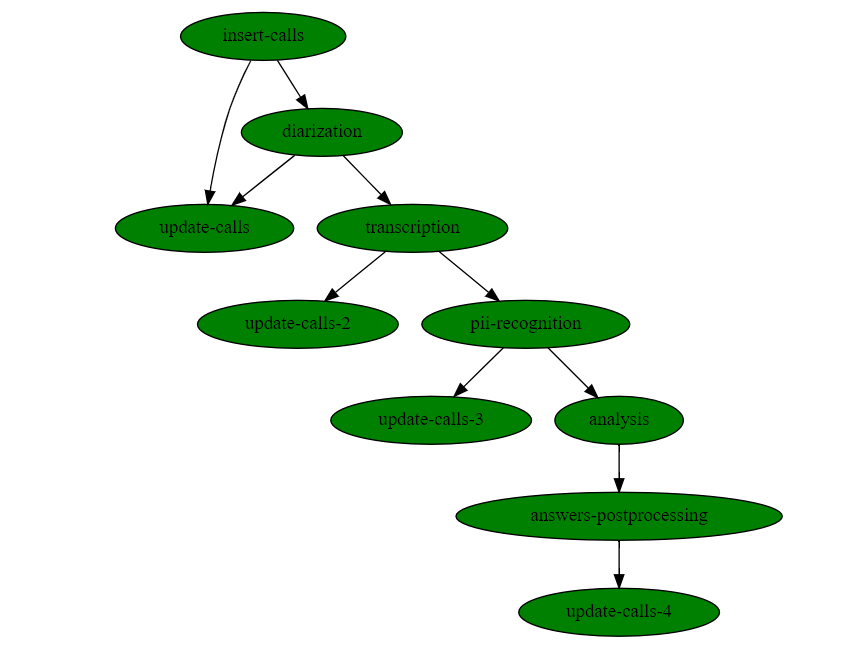

And it looks like this:

As before, no coding is required.

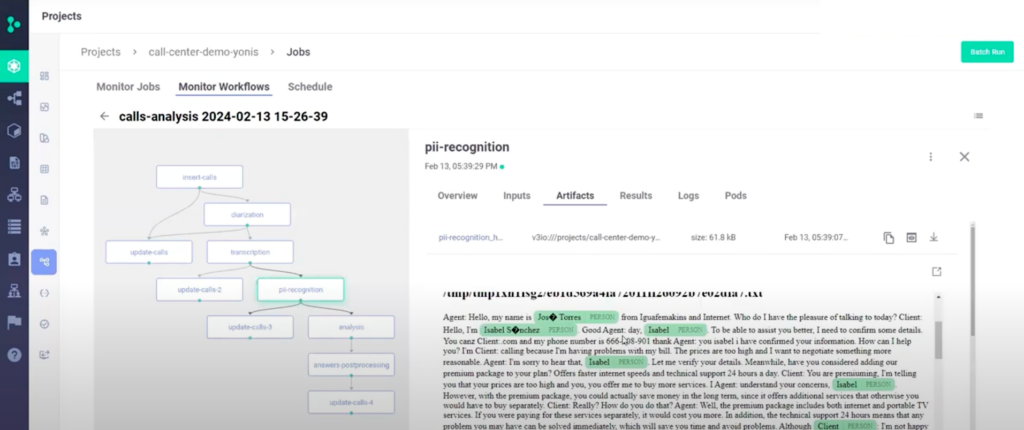

Here’s how some of the steps are executed:

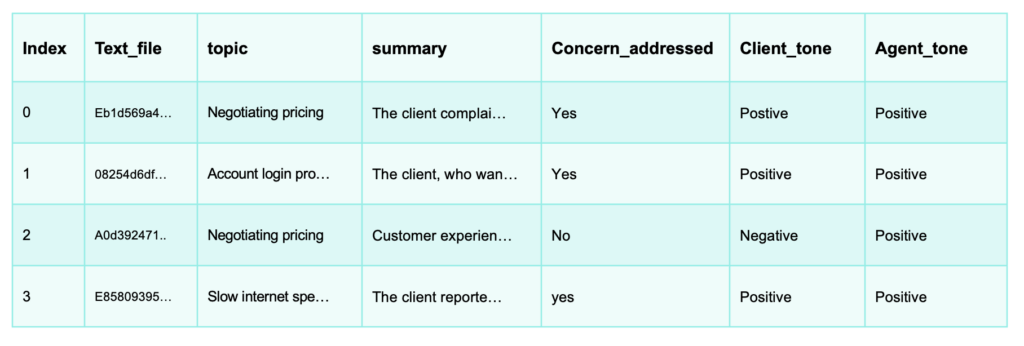

6. You can also use your database and the calls to develop new applications, for example prompting your LLM to find a specific call in your call database in a RAG-based chat app.

To hear what a real call sounds like, watch the video of this tutorial.
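To make the RAG-based chat app idea more concrete, here is a minimal sketch of its retrieval half. It is not code from the tutorial. It assumes call summaries are already stored in a database, and it uses Python's built-in `sqlite3` as a stand-in for the SQLAlchemy-backed database, a hypothetical `calls` table, and naive keyword-overlap scoring in place of the embedding search a production RAG app would typically use. The prompt it builds would then be sent to your LLM.

```python
import re
import sqlite3

# Hypothetical, minimal stop-word list so common words don't dominate the scoring.
STOP_WORDS = {"a", "an", "the", "was", "which", "about", "on", "to", "is", "what"}


def build_call_db(rows):
    """Create an in-memory database with a hypothetical `calls` table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE calls (call_id TEXT PRIMARY KEY, summary TEXT)")
    conn.executemany("INSERT INTO calls VALUES (?, ?)", rows)
    return conn


def tokenize(text):
    """Lowercase the text, split it into word tokens, and drop stop words."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOP_WORDS


def retrieve_calls(conn, question, k=2):
    """Return up to k calls whose summaries share the most words with the question."""
    q_tokens = tokenize(question)
    scored = []
    for call_id, summary in conn.execute("SELECT call_id, summary FROM calls"):
        score = len(q_tokens & tokenize(summary))
        if score > 0:
            scored.append((score, call_id, summary))
    # Highest score first; ties broken by call ID for a stable order.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [(call_id, summary) for _, call_id, summary in scored[:k]]


def build_prompt(question, retrieved):
    """Insert the retrieved call summaries into a prompt for the LLM."""
    context = "\n".join(f"[{cid}] {summary}" for cid, summary in retrieved)
    return (
        "Answer using only the calls below and cite the call ID.\n"
        f"Calls:\n{context}\n\nQuestion: {question}"
    )


if __name__ == "__main__":
    conn = build_call_db([
        ("call-001", "Customer asked to cancel a subscription renewal"),
        ("call-002", "Customer reported a billing error on the March invoice"),
        ("call-003", "Agent helped reset a forgotten account password"),
    ])
    question = "Which call was about a billing error?"
    print(build_prompt(question, retrieve_calls(conn, question)))
```

Keeping retrieval and prompt construction as separate functions makes it easy to swap the keyword scorer for a vector search later without touching the prompt logic.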

In addition to simplifying how pipelines are built and run, MLRun also provides automatic logging, automatic distribution of workloads, and auto-scaling of resources.
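To illustrate what "auto logging" means in practice, here is a generic, plain-Python sketch of the idea. It does not use MLRun, whose logging is configured through its own APIs. The sketch wraps each pipeline step so that its inputs, output, and duration are recorded without the step itself containing any logging code. `auto_log`, `RUN_LOG`, and the two placeholder steps are hypothetical names chosen for illustration.

```python
import functools
import time

RUN_LOG = []  # hypothetical in-memory store standing in for an experiment tracker


def auto_log(step):
    """Record a step's name, arguments, result, and duration each time it runs."""
    @functools.wraps(step)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        result = step(*args, **kwargs)
        RUN_LOG.append({
            "step": step.__name__,
            "inputs": {"args": args, "kwargs": kwargs},
            "output": result,
            "seconds": time.perf_counter() - start,
        })
        return result
    return wrapper


@auto_log
def transcribe(audio_name):
    # Hypothetical placeholder: a real step would run speech-to-text on the audio file.
    return f"transcript of {audio_name}"


@auto_log
def analyze(transcript):
    # Hypothetical placeholder: a real step would prompt an LLM for insights.
    return {"topic": "billing", "source": transcript}


if __name__ == "__main__":
    analyze(transcribe("call-002.wav"))
    for entry in RUN_LOG:
        print(entry["step"], "->", entry["output"])
```

Because the logging lives in the wrapper, step authors write only business logic, which is the same separation of concerns that built-in auto logging aims for.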